|

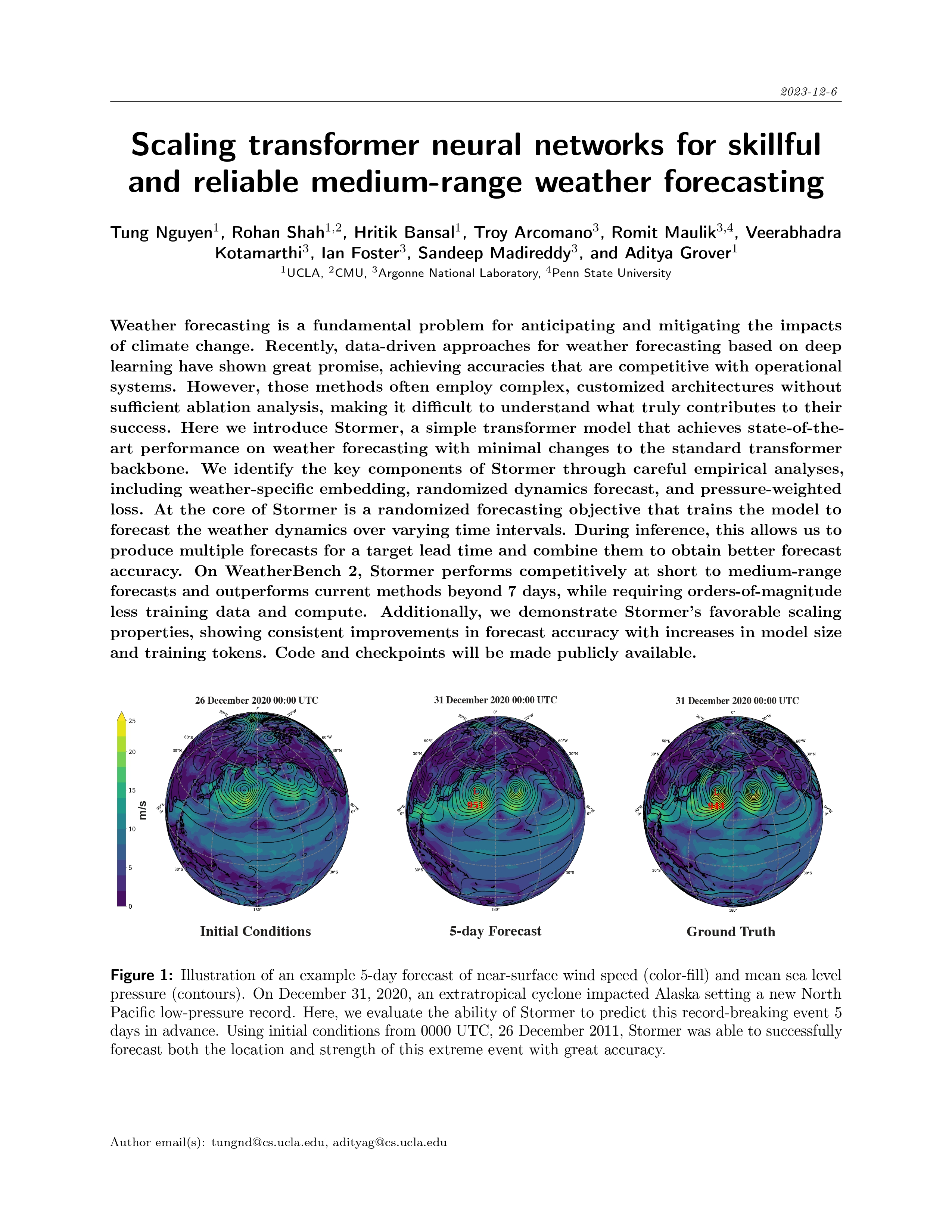

Weather forecasting is a fundamental problem for anticipating and mitigating the impacts

of climate change. Recently, data-driven approaches for weather forecasting based on deep

learning have shown great promise, achieving accuracies that are competitive with operational

systems. However, those methods often employ complex, customized architectures without

sufficient ablation analysis, making it difficult to understand what truly contributes to their

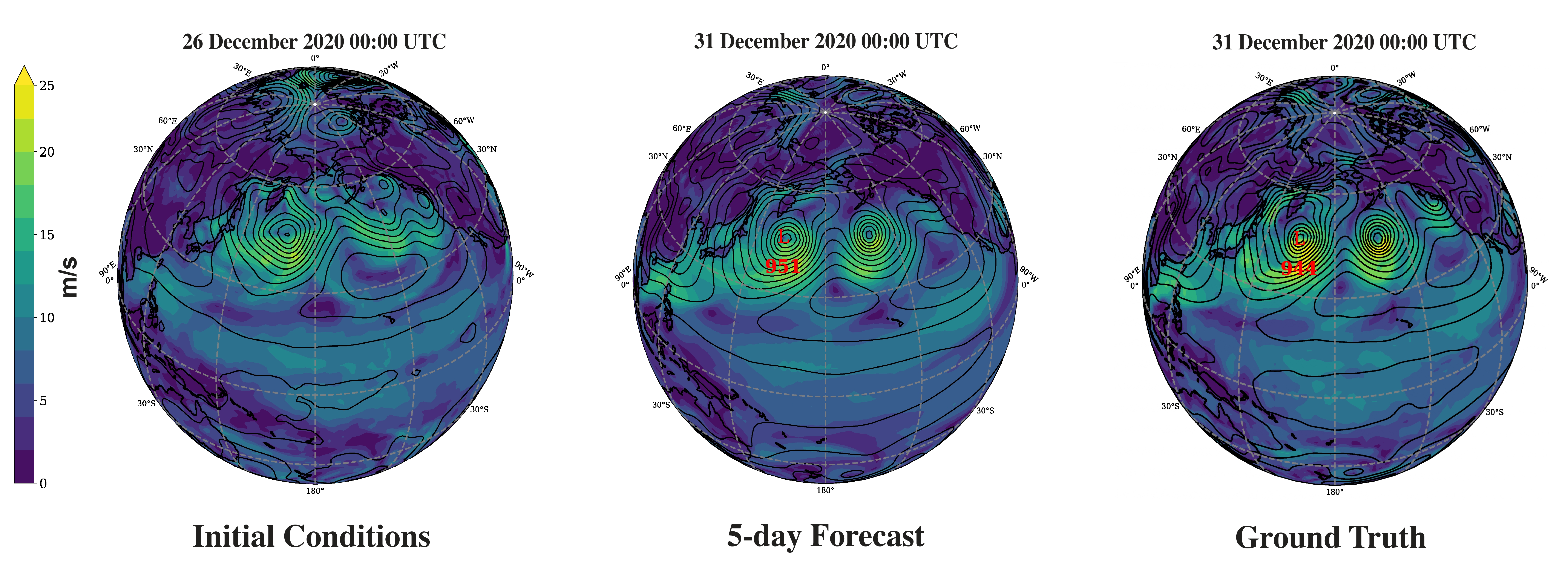

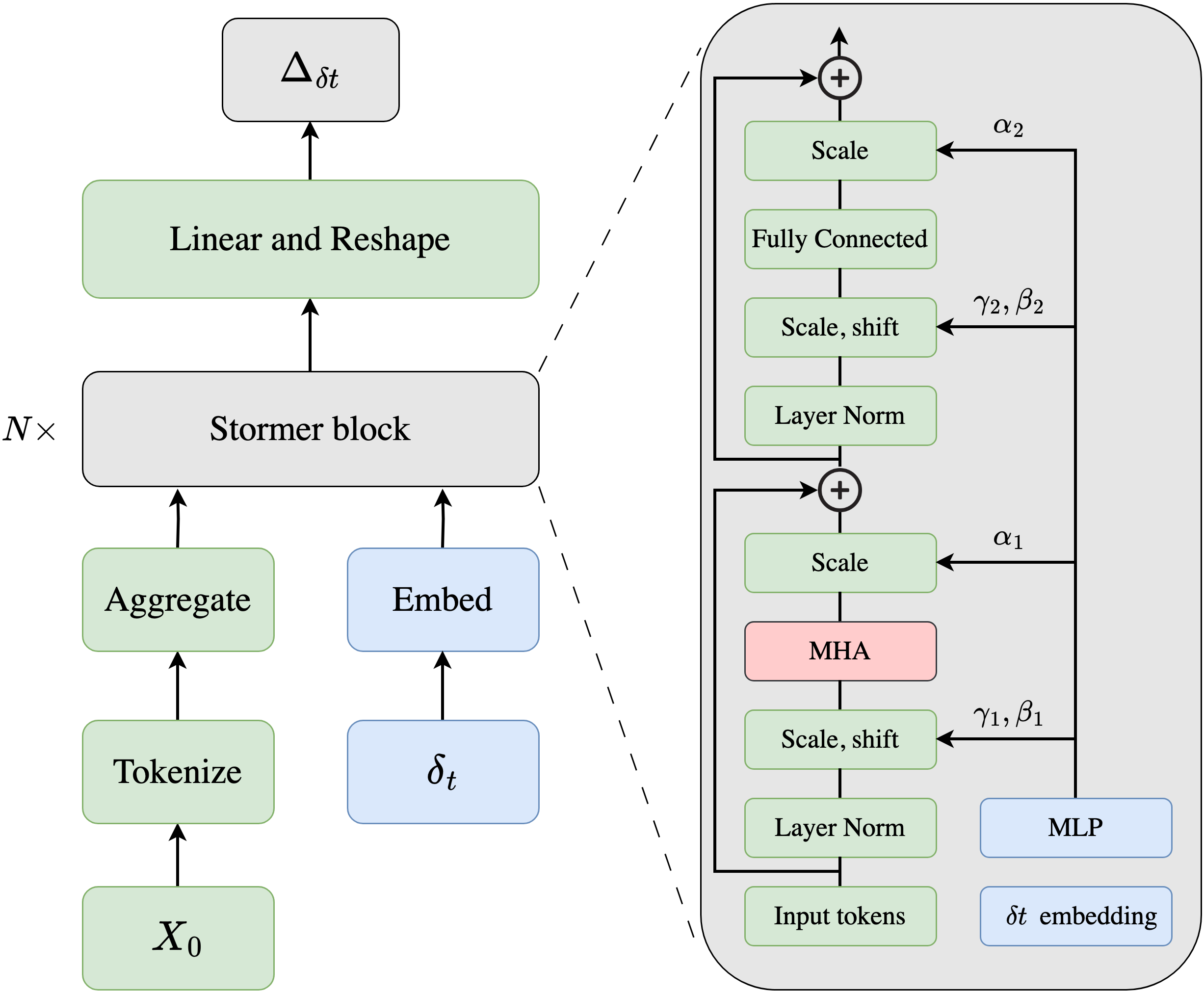

success. Here we introduce Stormer, a simple transformer model that achieves state-of-the-

art performance on weather forecasting with minimal changes to the standard transformer

backbone. We identify the key components of Stormer through careful empirical analyses,

including weather-specific embedding, randomized dynamics forecast, and pressure-weighted

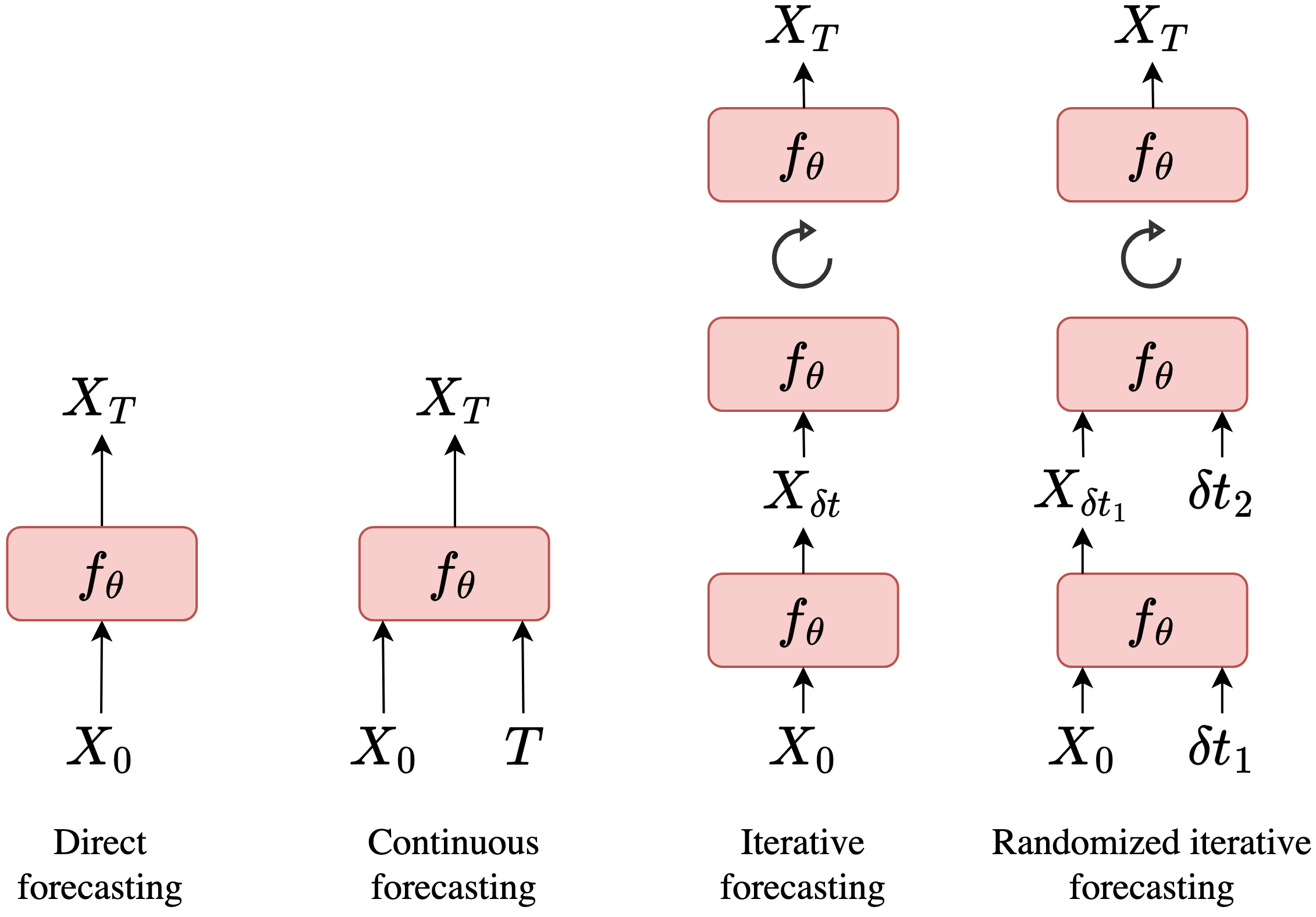

loss. At the core of Stormer is a randomized forecasting objective that trains the model to

forecast the weather dynamics over varying time intervals. During inference, this allows us to

produce multiple forecasts for a target lead time and combine them to obtain better forecast

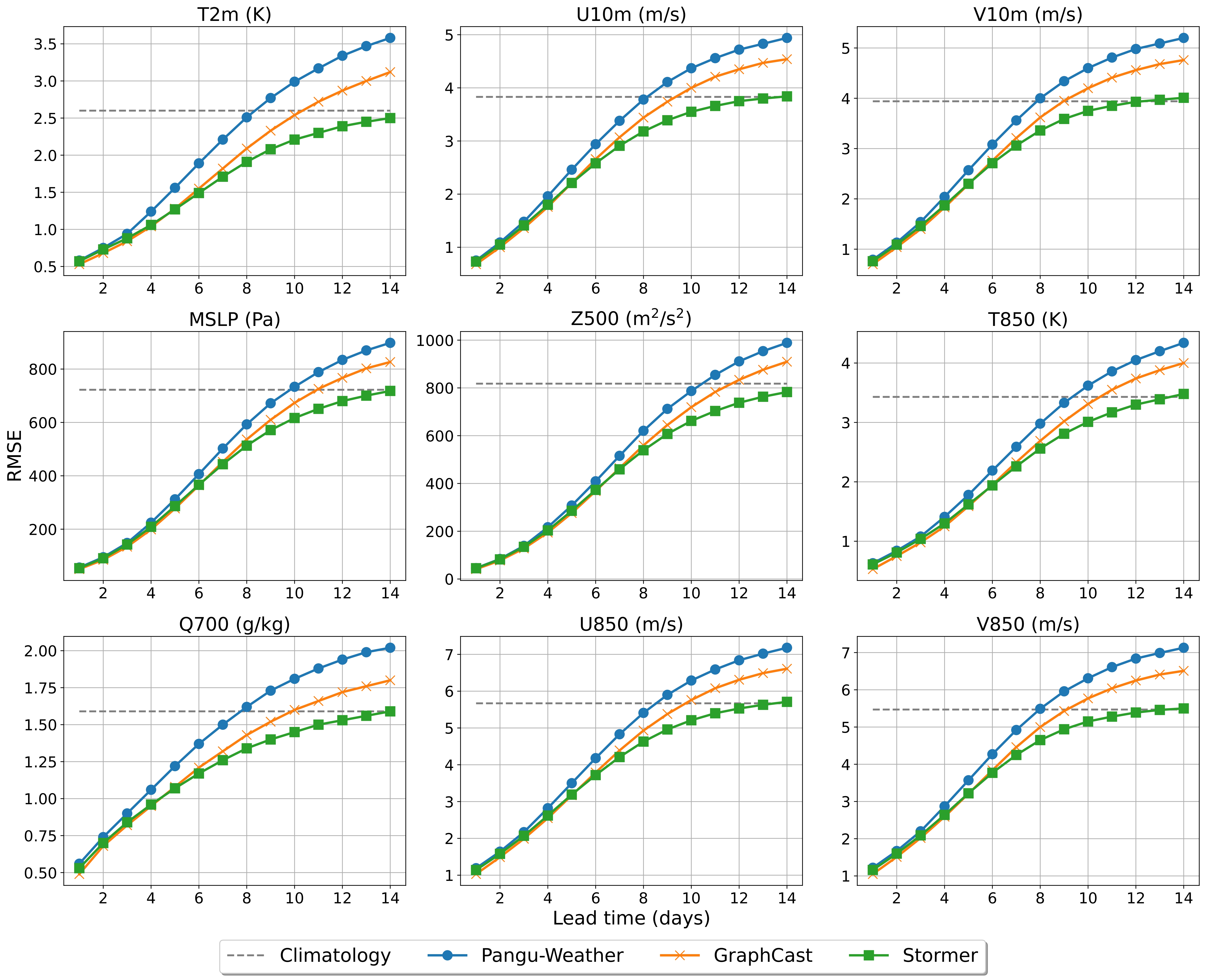

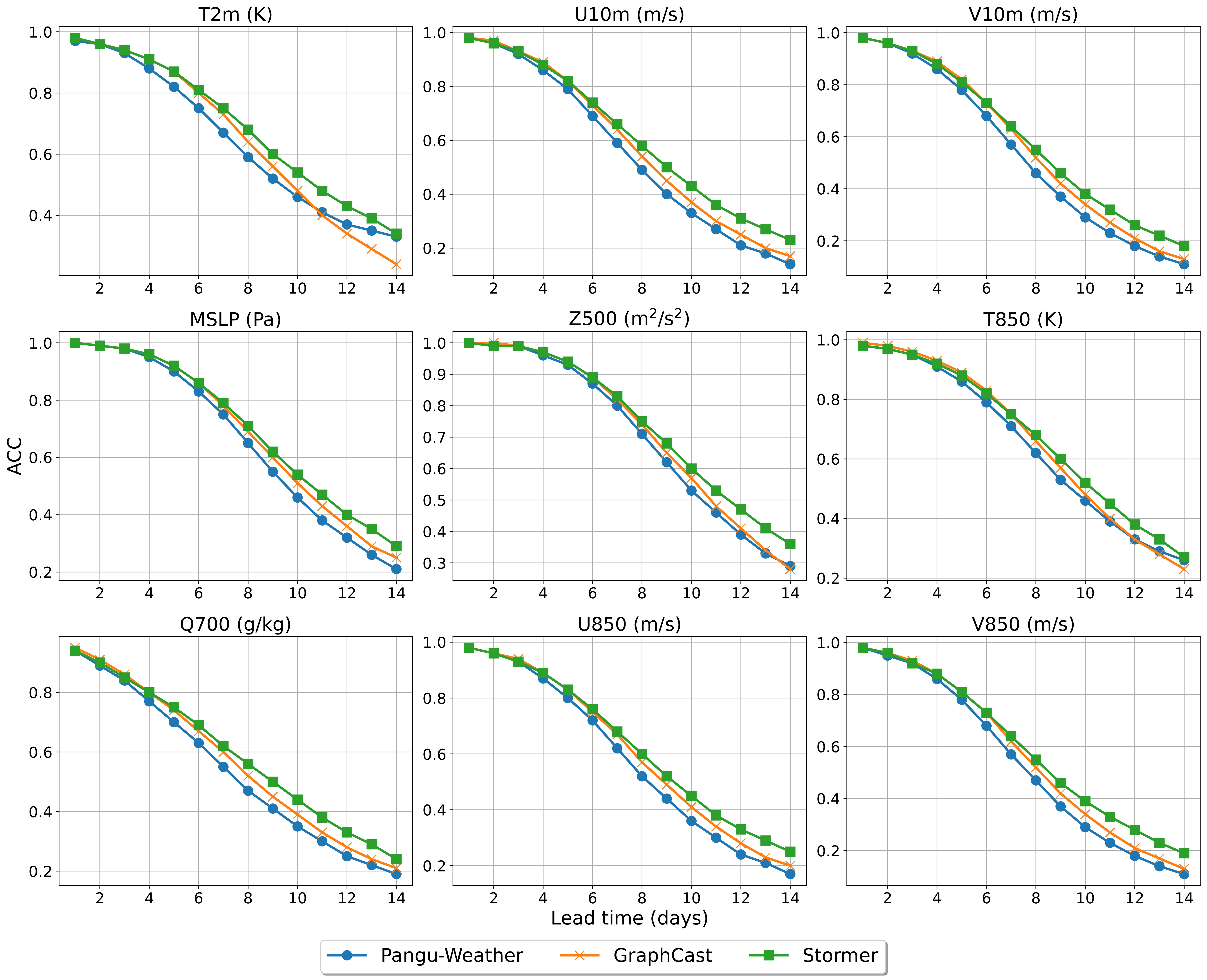

accuracy. On WeatherBench 2, Stormer performs competitively at short to medium-range

forecasts and outperforms current methods beyond 7 days, while requiring orders-of-magnitude

less training data and compute. Additionally, we demonstrate Stormer’s favorable scaling

properties, showing consistent improvements in forecast accuracy with increases in model size

and training tokens. Code and checkpoints will be made available.

|
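The inference-time idea described in the abstract, producing several forecasts for one target lead time and combining them, can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and is not stated in the abstract: the interval set (6, 12, 24 hours), the use of a plain average as the combination rule, the restriction to a single interval per rollout, and the names `rollout`, `combined_forecast`, and `toy_model`. The toy model is a stand-in for a trained network that predicts the change in state over a given interval.

```python
from typing import Callable, List, Sequence

State = List[float]
# Hypothetical model signature: given a state and an interval in hours,
# return the predicted change in the state (the "dynamics") over that interval.
DynamicsModel = Callable[[State, int], State]


def rollout(model: DynamicsModel, state: State, interval_h: int, lead_h: int) -> State:
    """Reach lead_h by repeatedly adding the predicted change over interval_h."""
    current = list(state)
    for _ in range(lead_h // interval_h):
        delta = model(current, interval_h)
        current = [x + d for x, d in zip(current, delta)]
    return current


def combined_forecast(
    model: DynamicsModel,
    state: State,
    lead_h: int,
    intervals_h: Sequence[int] = (6, 12, 24),  # assumed interval set
) -> State:
    """Average the rollouts from every interval that evenly divides lead_h."""
    usable = [dt for dt in intervals_h if lead_h % dt == 0]
    if not usable:
        raise ValueError(f"no interval in {tuple(intervals_h)} divides {lead_h} h")
    forecasts = [rollout(model, state, dt, lead_h) for dt in usable]
    # Element-wise mean across the individual forecasts (assumed combination rule).
    return [sum(values) / len(forecasts) for values in zip(*forecasts)]


def toy_model(state: State, interval_h: int) -> State:
    """Stand-in for a trained network: a constant drift of 0.5 units per hour."""
    return [0.5 * interval_h for _ in state]


if __name__ == "__main__":
    # Three rollouts (4 x 6 h, 2 x 12 h, 1 x 24 h) are averaged for a 24 h lead time.
    print(combined_forecast(toy_model, [0.0, 1.0], lead_h=24))
```

With a real model, the individual rollouts would differ because each interval accumulates error differently, which is what makes combining them worthwhile; in the toy model they agree exactly, so the sketch shows only the control flow.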